M&E Journal: AI in Broadcast News

In today’s fast-paced media landscape, broadcasters are always looking for tools to help them get content to air faster and with less effort. A news workflow is the perfect example.

In a large and busy broadcast news operation, content never stops coming in. Much of it lacks context and needs metadata to make it accessible and actionable.

So teams of staffers face the tedious, time-consuming task of manually reviewing, describing, and logging the continuous flow of incoming content.

The goal is to tease out which sound bites and video are relevant and important assets, and which are not. Once content is logged, it is commonly stored in a production asset management (PAM) system, where producers and editors access it to create finished news content.

Transcribing the content would be an ideal way to generate the much-needed metadata. Unlike manual tagging, which captures only a few keywords, a transcription records every spoken word within a piece of content, giving producers far more detail to search against.
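To make the search point concrete, here is a minimal Python sketch of how a time-stamped transcript can be searched. It assumes the speech-to-text engine returns word-level start times; the sample transcript and the `build_index` and `search` helpers are hypothetical and not part of any product described in this article.

```python
from collections import defaultdict
import re

# Hypothetical word-level transcript: (word, start_seconds) pairs, the kind of
# output a speech-to-text engine typically returns. Values are illustrative.
transcript = [
    ("The", 0.0), ("governor", 0.4), ("announced", 0.9), ("a", 1.4),
    ("new", 1.5), ("budget", 1.8), ("today.", 2.3), ("Budget", 5.1),
    ("talks", 5.6), ("continue", 6.0),
]

def build_index(words):
    """Map each normalized word to every time (in seconds) it is spoken."""
    index = defaultdict(list)
    for word, start in words:
        key = re.sub(r"[^\w']", "", word.lower())  # fold case, strip punctuation
        if key:
            index[key].append(start)
    return index

def search(index, term):
    """Return the start times at which a term is spoken, earliest first."""
    return sorted(index.get(term.lower(), []))

index = build_index(transcript)
print(search(index, "budget"))
```

This inverted index is the classic structure behind full-text search: each spoken word points back to the moments where it occurs, so a producer can jump straight to a sound bite instead of scrubbing through the feed.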

But in a fast-paced news environment, broadcasters do not possess the staff or the time necessary to do the tagging, let alone transcription. And then correlating it all in the content management system? It’s all just too much.

Artificial intelligence is well suited to solving such problems. Let’s dive deeper.

How can AI and machine learning make things faster and easier?

Applying AI and ML technologies to news workflows yields automatically generated, highly accurate metadata for video content faster and at lower cost than traditional methods. That translates into major savings in money, effort, and time. It also means better-structured, more detailed, and more accurate metadata, and shorter content delivery cycles.

What does an AI-driven metadata automation tool look like?

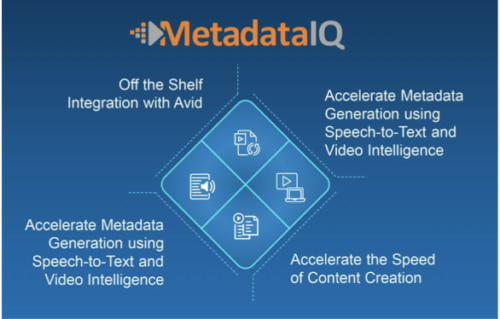

Today’s cloud-based architecture for AI and ML components offers broadcasters easy access to these technologies with the promise of accuracy, scalability, and trainability. Certain AI-driven metadata solutions combine high-performance AI capabilities in the cloud (speech-to-text, facial recognition, object identification, content classification, etc.) with powerful knowledge management orchestration tools.

The ideal tool also integrates the metadata into the production environment. It not only generates speech-to-text transcripts of incoming feeds (or of stored content) in real time, but also parses each transcript by time and indexes it back to the corresponding media in the Avid environment, where producers and editors create the content.
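Parsing a transcript "by time" can be as simple as grouping timed words into short segments tied to a clip. The Python sketch below assumes the engine returns each word with a start and end time in seconds and uses a fixed maximum segment length; `Segment`, `segment_transcript`, the `clip_id` value, and the sample words are hypothetical illustrations, not the vendor's actual method.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    clip_id: str   # hypothetical ID of the media clip the text belongs to
    start: float   # seconds from the start of the clip
    end: float
    text: str

def segment_transcript(clip_id, words, max_seconds=5.0):
    """Group (word, start, end) tuples into consecutive segments.

    A new segment begins when adding the next word would make the
    current segment span more than max_seconds.
    """
    segments, current = [], []

    def flush():
        # Close the current run of words as one time-bounded segment.
        segments.append(Segment(clip_id, current[0][1], current[-1][2],
                                " ".join(w for w, _, _ in current)))

    for word, start, end in words:
        if current and end - current[0][1] > max_seconds:
            flush()
            current.clear()
        current.append((word, start, end))
    if current:
        flush()
    return segments

words = [("Storms", 0.0, 0.5), ("hit", 0.5, 0.8), ("the", 0.8, 0.9),
         ("coast", 0.9, 1.4), ("overnight", 1.4, 2.1),
         ("Crews", 6.0, 6.4), ("are", 6.4, 6.5), ("responding", 6.5, 7.2)]
for seg in segment_transcript("FEED-07", words):
    print(seg)
```

Each segment carries its clip ID and time range, which is the information needed to link the text back to the right point in the media.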

The results: actionable intelligence for media operators and faster search, retrieval, production, and delivery of news content.

What results are news broadcasters seeing?

One U.S. news and entertainment network has put this solution into action, transforming its news production workflow.

With upwards of 100 live news feeds continually coming in, all with little to no context, even this news powerhouse couldn’t employ enough people to log every feed around the clock, day after day. The cost would be too great, and a human workforce could never scale to match.

This news organization understood that automated, real-time transcripts would accelerate awareness and accessibility of its content, so it turned to the AI-driven solution described above.

The solution uses a variety of AI and ML technologies to process all live news feeds from a wide array of sources simultaneously, on a 24x7x365 basis. No human team, no matter how large, could hope to perform concurrent, real-time processing of this quantity of media.

In the current implementation, the solution leverages multiple AI engines and language models, which include the following capabilities:

* Automatic speech recognition (ASR)

* Natural language processing (NLP)

* Language model adaptation (LMA)

* Automated transcript generation

* Frame-accurate time indexing of metadata to media

Planned expansions of the core solution, in phase two and beyond, will add video intelligence capabilities such as:

* Facial/name recognition

* Emotion detection

* Location detection

* Program segmentation

* Logo detection

* Object identification

* Optical character recognition (OCR)

SOLUTION BENEFITS

1. Automated transcript generation for live feeds greatly accelerates post-production within the Avid PAM environment. It not only delivers real-time, searchable transcript metadata but also helps producers and editors locate, retrieve, and create finished news content faster than ever before.

2. Integrated, SaaS-based transcript editing tools, which allow operators to refine and perfect machine transcripts of high-value content that will live on indefinitely in the MAM and long-term archive.

3. Increased awareness and visibility of all assets, including legacy media archives, for optimized utilization and monetization.

4. Transcribed media that can be further refined as needed to generate closed caption files and/or subtitles for applications requiring media localization in up to 108 alternate languages.

5. Fully automated transcript generation, which eliminates human resource limitations and allows all content to benefit from metadata-rich enhancement.
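Benefit 4 mentions refining transcripts into closed caption files. The article does not name a caption format, so as an assumption this Python sketch renders timed transcript segments as SubRip (SRT) cues, one widely used subtitle format. The `srt_timestamp` and `to_srt` helpers and the sample cues are hypothetical.

```python
def srt_timestamp(seconds):
    """Format an offset in seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"

def to_srt(cues):
    """Render (start, end, text) cues as the body of an SRT caption file."""
    blocks = []
    for number, (start, end, text) in enumerate(cues, start=1):
        # Each cue: sequence number, time range, caption text.
        blocks.append(f"{number}\n{srt_timestamp(start)} --> "
                      f"{srt_timestamp(end)}\n{text}\n")
    return "\n".join(blocks)  # a blank line separates consecutive cues

cues = [(0.0, 2.1, "Storms hit the coast overnight."),
        (6.0, 7.25, "Crews are responding.")]
captions = to_srt(cues)
print(captions)
```

In practice an operator would first correct the machine transcript with the editing tools in benefit 2, since caption errors are visible to every viewer.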

How does it work?

As media content is ingested, it is routed via an API to cloud-based AI engines for speech-to-text processing. The resulting transcript metadata is returned and passed through a series of real-time orchestration software layers, which generate markers and frame-accurately time-index the media and transcript metadata within the Avid Interplay PAM environment.
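Frame-accurate time indexing comes down to converting a time offset into a timecode that names one specific frame. This Python sketch assumes an integer frame rate (non-drop-frame timecode, for example 25 fps) and offsets measured from the start of the clip; 29.97 fps drop-frame timecode, common in U.S. broadcast, needs an extra correction that is not shown. The marker records are plain dictionaries for illustration, not the Avid Interplay marker format or API, and `seconds_to_timecode` and `make_markers` are hypothetical helpers.

```python
from fractions import Fraction

def seconds_to_timecode(seconds, fps=25):
    """Convert an offset in seconds to non-drop-frame HH:MM:SS:FF timecode.

    Assumes an integer frame rate; drop-frame timecode is not handled.
    """
    # Exact decimal arithmetic avoids float rounding pushing a time into
    # the previous frame; int() then floors to the frame containing it.
    total_frames = int(Fraction(str(seconds)) * fps)
    frames = total_frames % fps
    total_seconds = total_frames // fps
    hours, rem = divmod(total_seconds, 3600)
    minutes, secs = divmod(rem, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}:{frames:02d}"

def make_markers(segments, fps=25):
    """Turn (start_seconds, text) segments into hypothetical marker records."""
    return [{"timecode": seconds_to_timecode(start, fps), "comment": text}
            for start, text in segments]

markers = make_markers([(0.0, "Storms hit the coast overnight"),
                        (3725.48, "Crews are responding")])
for marker in markers:
    print(marker)
```

A production system would also add the clip's own start timecode to each offset so markers line up with the source material.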

Prior to the advent of AI and machine learning, the benefits of automated transcript generation were not available to the broadcast and M&E marketplace.

Today, this technology opens new doors to efficiency, accessibility, cost savings, and expanded monetization for broadcasters and media operators across the industry.

By Ed Hauber, Business Development, Digital Nirvana

Published in the Spring 2022 M&E Journal.