M&E Journal: AI-Empowered Workflows for Fast and Compliant Content Protection

Success in today’s highly competitive media environment often comes down to the ability to create and deliver the highest-quality, most compliant content in the shortest time possible.

That’s where cloud-based AI and machine learning (ML) solutions come in.

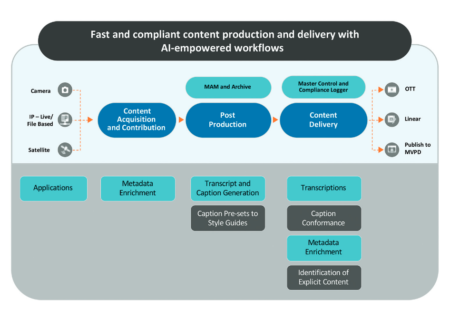

Media factories can use AI and ML tools to make better decisions and drive efficient, compliant broadcast workflows at every stage of the content process, from acquisition and contribution to post-production and final content delivery.

In this article, we’ll take a look at how AI-empowered workflows can streamline and accelerate all three phases of a content-delivery operation for both linear broadcast and OTT delivery.

ACQUISITION: MAKING CONTENT INSTANTLY SEARCHABLE FOR NEW EFFICIENCIES

For a typical broadcast news operation, AI technologies can play a big role in accelerating the creation of finished content.

Cloud-based speech-to-text (STT) technologies are best known for their ability to streamline closed-caption generation in post-production, but they offer an equally compelling opportunity at content ingest.

In a typical media operation producing content for broadcast news and OTT streaming services, the content acquisition stage can be a large bottleneck, often slowed by the sheer volume of raw content that comes in from various field news-gathering sources.

It then falls on the production team to review the content and cull out the needed sound bites and segments for a particular story. Until now, this process has been entirely manual: production staffers spend valuable time reviewing the content and logging applicable segments, along with the time reference of the tagged footage, for easier retrieval later. According to a shooting ratio well known to news operations, it takes at least 15 minutes of raw footage to produce one minute of finished, ready-to-air content using manual processes.

That time adds up: at a 15:1 ratio, a 60-minute program calls for at least 900 minutes (15 hours) of raw footage to review. Instead of deploying staff to review and log content manually, an operation can run it through the STT engine.

Almost instantly, the AI technology generates a transcript of all spoken words in the video together with metadata and time references.

When cutting video for a story, producers can search the transcript and rapidly find the relevant content they need for the finished piece.
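To make the search step concrete, here is a minimal Python sketch of looking up a phrase in a time-coded transcript. The `Segment` shape, the function names, and the sample text are assumptions for illustration only; a real STT engine will return its own transcript format.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One transcript segment with start/end times in seconds (hypothetical shape)."""
    start: float
    end: float
    text: str


def to_timecode(seconds: float) -> str:
    """Format a time in seconds as HH:MM:SS for display in a log."""
    total = int(seconds)
    return f"{total // 3600:02d}:{total % 3600 // 60:02d}:{total % 60:02d}"


def search_transcript(segments: list[Segment], phrase: str) -> list[tuple[str, str]]:
    """Return (timecode, text) for every segment containing the phrase, ignoring case."""
    needle = phrase.casefold()
    return [
        (to_timecode(s.start), s.text)
        for s in segments
        if needle in s.text.casefold()
    ]


# Illustrative sample data, not taken from any real broadcast.
segments = [
    Segment(12.0, 18.5, "The mayor announced a new transit plan today."),
    Segment(95.2, 101.0, "Critics say the transit budget is too small."),
    Segment(3725.0, 3731.4, "Weather will clear by the weekend."),
]

hits = search_transcript(segments, "transit")
for timecode, text in hits:
    print(timecode, text)
```

Because each hit carries the time reference of its segment, a producer can jump straight to that point in the raw footage instead of scrubbing through it.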

POST-PRODUCTION: SHAVING TIME OFF COMPLIANT CAPTION DELIVERY

Automated captioning is another powerful application of AI processing that leverages the intelligence and flexibility of cloud-based STT technology. By building the closed-captioning process into their existing operations, broadcasters can decrease their costs and increase the productivity of in-house post-production teams by orders of magnitude.

Because today’s STT technologies have been trained on billions of words and on thousands of hours of spoken data representing a wide array of accents, they are able to generate captions that are remarkably accurate.

With new OTT platforms entering the streaming marketplace at dizzying speed, one critical requirement is the ability to deliver high-value content tailored to the specific standards and style guidelines of multiple OTT delivery platforms.

This includes not only accurate, properly formatted closed captions, but the delivery of transcripts and captions in multiple languages to support content delivery to multiple regions. In a typical workflow, the STT engine outputs a time-coded transcript that can be viewed side by side with the video and captions, along with tools for editing text and adding visual cues, music tags, and speaker tags.

As the operator speeds through the correction process, the corrected transcript is automatically converted into time-synced captions in accordance with policies defined in the appropriate preset. The corrected and finalized file is then used to generate and export the multiple output formats, including secondary languages, required for distribution, with each format in compliance with the individual style guide of the delivery platform.
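The conversion from a corrected transcript to time-synced captions can be sketched roughly as follows. This is an illustration under stated assumptions, not any vendor's implementation: the `Word` and `Cue` shapes and the single `max_chars` preset rule are hypothetical, and SRT and WebVTT simply stand in for whatever output formats a delivery platform requires. Translation into secondary languages is outside the scope of the sketch.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float  # seconds
    end: float    # seconds


@dataclass
class Cue:
    start: float
    end: float
    text: str


def build_cues(words: list[Word], max_chars: int) -> list[Cue]:
    """Group timed words into cues of at most max_chars (a hypothetical preset rule)."""
    cues: list[Cue] = []
    current: list[Word] = []

    def flush() -> None:
        cues.append(Cue(current[0].start, current[-1].end,
                        " ".join(w.text for w in current)))

    for word in words:
        candidate = " ".join(w.text for w in current + [word])
        if current and len(candidate) > max_chars:
            flush()
            current = []
        current.append(word)
    if current:
        flush()
    return cues


def timestamp(seconds: float, separator: str) -> str:
    """HH:MM:SS<sep>mmm; SRT uses a comma before milliseconds, WebVTT a period."""
    ms = round(seconds * 1000)
    h, rem = divmod(ms, 3_600_000)
    m, rem = divmod(rem, 60_000)
    s, ms = divmod(rem, 1000)
    return f"{h:02d}:{m:02d}:{s:02d}{separator}{ms:03d}"


def to_srt(cues: list[Cue]) -> str:
    """Render cues as SubRip text: index, time range, caption text."""
    blocks = [
        f"{i}\n{timestamp(c.start, ',')} --> {timestamp(c.end, ',')}\n{c.text}"
        for i, c in enumerate(cues, 1)
    ]
    return "\n\n".join(blocks) + "\n"


def to_webvtt(cues: list[Cue]) -> str:
    """Render cues as WebVTT text, which starts with a WEBVTT header line."""
    blocks = [
        f"{timestamp(c.start, '.')} --> {timestamp(c.end, '.')}\n{c.text}"
        for c in cues
    ]
    return "WEBVTT\n\n" + "\n\n".join(blocks) + "\n"


# Illustrative word timings, as a corrected transcript might provide them.
words = [
    Word("Good", 0.0, 0.3), Word("evening", 0.3, 0.8), Word("and", 0.8, 0.95),
    Word("welcome", 0.95, 1.5), Word("to", 1.5, 1.6), Word("the", 1.6, 1.7),
    Word("news", 1.7, 2.2),
]

cues = build_cues(words, max_chars=16)
print(to_srt(cues))
print(to_webvtt(cues))
```

Keeping the cues as one intermediate structure and rendering each format from it mirrors the idea in the text: the operator corrects the transcript once, and every output is regenerated from that single corrected source.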

DELIVERY: ASSURING QUALITY, TECHNICAL COMPLIANCE, AND AN OPTIMAL USER EXPERIENCE

Closely linked to the AI-based transcription/captioning workflow is metadata-driven compliance monitoring and logging, which natively records live video, audio, and all associated metadata from any point in the production chain.

By capturing all broadcast and OTT content along with all captions and subtitles in a range of formats and in multiple languages, this system validates the presence and accuracy of necessary metadata at the point of distribution. Recorded and archived content provides a rich source of information for collaboration and assessment across the organization.
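One simple instance of validating caption presence is checking a recorded program for long stretches with no captions at all. The sketch below is a hypothetical illustration: the function name, the `(start, end)` cue tuples, and the 10-second threshold are assumptions, and the threshold is not a regulatory figure.

```python
def find_caption_gaps(cues, program_start, program_end, max_gap):
    """Return (gap_start, gap_end) spans longer than max_gap seconds with no captions.

    cues is a list of (start, end) times in seconds; it need not be sorted.
    """
    gaps = []
    cursor = program_start  # latest point covered by captions so far
    for start, end in sorted(cues):
        if start - cursor > max_gap:
            gaps.append((cursor, start))
        cursor = max(cursor, end)
    if program_end - cursor > max_gap:
        gaps.append((cursor, program_end))
    return gaps


# Illustrative cue times for a 60-second recording.
cues = [(0.0, 4.0), (5.0, 9.0), (40.0, 44.0), (45.0, 58.0)]
gaps = find_caption_gaps(cues, program_start=0.0, program_end=60.0, max_gap=10.0)
print(gaps)  # [(9.0, 40.0)]
```

A report of such gaps, with their time references, gives an operator a short list of places to inspect rather than a full program to rewatch.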

Cloud-based AI capabilities such as STT transcription and video recognition empower operators to find content quickly and mine for media insights.

Furthermore, the monitoring system can use closed captions to generate transcripts of aired programs for a variety of applications.

Plus, a broadcaster can make the captions searchable to allow its legal and engineering teams to locate high-value content quickly, generate clips, and export assets for FCC and ADA proof of compliance.

A LOOK AHEAD

AI and ML technologies are continuing to transform and accelerate virtually every aspect of content creation and distribution.

Newsrooms, live sports and entertainment productions, post houses, and other media operations are able to expedite critical processes, reduce mundane tasks, and free creative personnel to do their jobs.

As these technologies grow in power and sophistication, they’re bringing new levels of accuracy, efficiency, compliance, and cost savings to broadcast operations.

* By Russell Wise, SVP, Sales, Marketing, Digital Nirvana

This article appears in the Winter 2020/2021 M&E Journal.